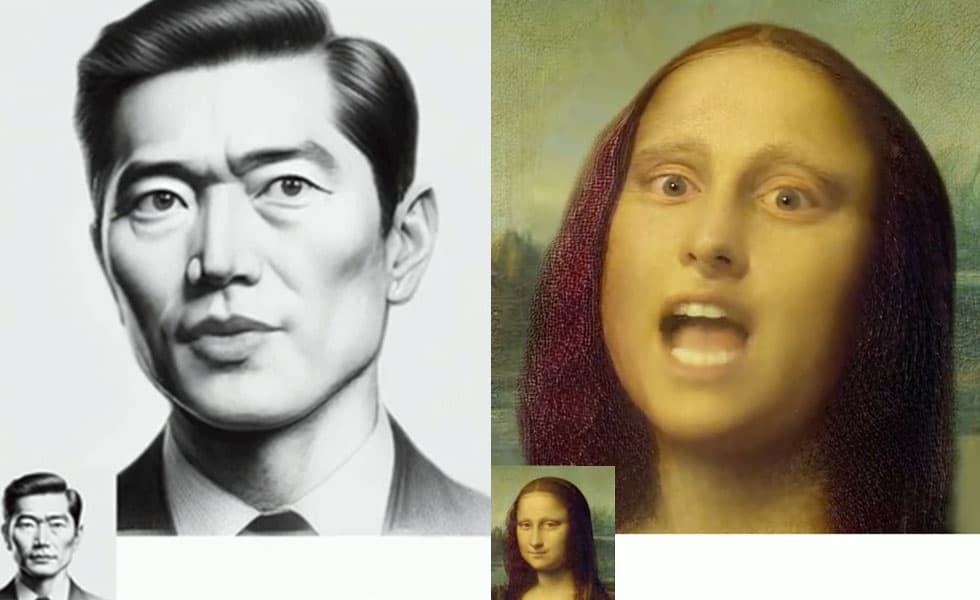

(Original Image from Microsoft Research)

In the rapidly evolving landscape of artificial intelligence, Microsoft’s VASA-1 stands out as a groundbreaking development. This AI model, developed by Microsoft Research Asia, harnesses the power of deep learning to animate static images using just a single photo and a corresponding audio track. This image-to-video AI model does more than just mimic speech; it can create a full range of facial expressions and natural head movements, making the videos impressively realistic.

As brands continue seeking more innovative and interactive engagement methods, VASA-1 opens new frontiers, especially in fan engagement experiences and marketing strategies.

What is Microsoft’s VASA-1?

Microsoft’s VASA-1 is an AI model that generates realistic talking-head videos from a single static image of a person and an audio track. It animates the person’s head in real time, synchronizing lip movements with the supplied audio and adding expressive facial movements and head motions. The result is a lifelike digital avatar, sometimes called a talking head or a lip-syncing head.

For Microsoft’s VASA-1 to function, it requires:

- A Static Image: A high-quality, clear portrait photo of the person who is to be animated.

- An Audio Track: A speech audio clip that the image is supposed to “speak.” This audio should be clear and well-recorded to ensure precise lip synchronization.

- Processing Power: Adequate computational resources are necessary to handle the AI’s operations, which involve real-time video generation and the application of complex machine learning models.

Interactive Fan Engagement Experiences

The entertainment industry, particularly music, sports, and film, can leverage VASA-1 to create novel fan experiences. Imagine attending a concert where past legends can be brought back to life, singing their classic hits synchronized perfectly with archival audio recordings. Sports fans could receive personalized messages from their favorite athletes as a realistic video.

We recently created an experience where guests needed to register to take part in the fan experience. We used text-to-speech and Natural Language Processing to power the voice-over component, which called the actual play-by-play results of a guest’s performance during an interactive game. We hired voice talent to provide the announcer’s voice, and those recordings were then used to train our AI engine to call play-by-play action in real time. This included the ability to announce the player’s name when the game started, add colorful commentary, and even create nicknames for guests based on their performance.

Adding a visual element leveraging Microsoft’s VASA-1 would have enhanced the user experience, creating a more believable, immersive fan engagement. This visual element could also have been used to create bespoke videos to share on a guest’s social media platform.

Marketing and Advertising

The implications of VASA-1 in marketing are vast. Brands could create more personalized advertising campaigns that feature realistic interactions with brand ambassadors or virtual customer service representatives who respond to queries with human-like expressions and movements. Such personalized interactions are likely to increase customer engagement and boost brand loyalty.

VASA-1 allows brands to produce hyper-realistic animations of brand ambassadors or fictional characters that can interact with consumers in a lifelike manner. This capability can be leveraged to deliver personalized messages, product endorsements, and interactive advertisements that capture consumers’ attention more effectively than traditional media. The realistic animations can be used in digital ads, social media platforms, and interactive billboards, offering a novel way to engage potential customers.

Having an on-camera spokesperson or character talking to you is powerful. CGI avatars have advanced; however, this new approach, which uses AI and a single static image as the base model, is extremely powerful on the production side and opens up all kinds of potential.

Furthermore, VASA-1 can revolutionize the way products are demonstrated. Marketers can use AI to create lifelike avatars of models who can then showcase apparel or other products in detailed, realistic videos. This application could extend to online shopping experiences, where customers can upload their photo and include their reviews, significantly enhancing the virtual shopping experience.

Another standout opportunity is supporting real-time applications such as live customer service interactions. Brands can use this technology to create virtual customer service agents that respond to inquiries with human-like expressions and movements, providing a more pleasant and engaging customer service experience.

Challenges and Considerations

While the potential uses of VASA-1 are exciting, they also come with ethical considerations. The ability to create hyper-realistic videos effortlessly raises concerns about the technology’s misuse to spread misinformation or create fraudulent content. Companies must implement strict usage guidelines and perhaps integrate digital watermarking to ensure the origins of AI-generated content are always known and traceable.
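One lightweight way to make the origin of generated content traceable is to publish a signed provenance record alongside each video. The sketch below is only an illustration under stated assumptions: it uses a sidecar record rather than a watermark embedded in the pixels, it is not part of VASA-1 or any Microsoft tooling, and the function names, the record fields, and the `SECRET_KEY` value are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; a real deployment would load this from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"


def build_provenance_record(video_bytes: bytes, generator: str,
                            key: bytes = SECRET_KEY) -> dict:
    """Return a signed record that labels a video file as AI-generated."""
    record = {
        "sha256": hashlib.sha256(video_bytes).hexdigest(),  # fingerprint of the file
        "generator": generator,                             # free-text tool label
        "ai_generated": True,
    }
    # Sign a canonical JSON form so field order cannot change the signature.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_provenance_record(video_bytes: bytes, record: dict,
                             key: bytes = SECRET_KEY) -> bool:
    """Check that the record matches the file and was signed with our key."""
    claimed = dict(record)
    signature = claimed.pop("signature", "")
    if claimed.get("sha256") != hashlib.sha256(video_bytes).hexdigest():
        return False  # the file was altered or the record belongs to another file
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(signature, expected)


if __name__ == "__main__":
    video = b"placeholder bytes standing in for a rendered video file"
    record = build_provenance_record(video, generator="example-avatar-tool")
    print(verify_provenance_record(video, record))
    print(verify_provenance_record(video + b"tampered", record))
```

A record like this only proves origin to someone who holds the key and receives the record with the file. It does not survive re-encoding or cropping, so it would complement an embedded watermark and clear usage guidelines rather than replace them.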

Moreover, there are technical challenges. The more realistic these animations become, the greater the processing power required to generate and display them. Ensuring that these technologies can be run efficiently on a wide range of devices will be key to their widespread adoption.

Not Ready for Prime Time

VASA-1 is currently just a research demonstration. Microsoft does not plan to release the product or allow others to use the API; essentially, it just wants to show off its lip-syncing model capabilities. As the technology evolves, it could offer even more sophisticated tools for marketers and advertisers to create engaging, innovative content that resonates with consumers on a deeper level.

Microsoft’s VASA-1 research white paper: https://www.microsoft.com/en-us/research/project/vasa-1/